What is velocity in an Agile project?

Velocity is the total number of story points for the stories completed in an iteration. A story counts only when it is DONE by the team’s definition of DONE.

How do we account for partially completed stories in an iteration?

An iteration will often end with some stories only partially completed. These stories should not earn partial credit in that iteration’s velocity. Instead, their full points count toward the velocity of the iteration in which they are actually DONE.
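The rule can be illustrated with a minimal sketch. The `Story` class, its field names and the sample stories are hypothetical, made up for this illustration; the only thing taken from the text is that a story's points count in the iteration where it becomes DONE.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Story:
    title: str
    points: int
    # Iteration in which the story met the definition of DONE (None = not DONE yet).
    done_in: Optional[int] = None


def velocity(stories, iteration):
    """Sum the points of stories that became DONE in the given iteration."""
    return sum(s.points for s in stories if s.done_in == iteration)


# Hypothetical sample data.
stories = [
    Story("Login", 3, done_in=1),
    Story("Search", 5, done_in=2),    # started in iteration 1, DONE in iteration 2
    Story("Checkout", 2, done_in=2),
    Story("Reports", 8),              # still in progress, counts nowhere yet
]

print(velocity(stories, 1))  # 3
print(velocity(stories, 2))  # 7
```

Note that "Search" contributes nothing to iteration 1 even though work started there; all 5 of its points land in iteration 2.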

How does one plan velocity when starting a new project?

When starting a new project, there is no previous velocity to go by, so there are a couple of options one can use:

a. Ensure that at least one developer pair is available for a week to do a couple of Small/Medium stories from the release scope using the proposed technology stack. A QA can then judge roughly how complex it is to test each story manually and to write an automation script for it. This should give the team some confidence in the proposed technology and a rough idea of how many stories can be completed within a week.

b. Do a raw velocity exercise. In a raw velocity exercise, the team decides how many stories it can finish in an iteration. This is done by repeatedly picking different sample sets of already sized stories that the team believes can be done within one iteration. The point totals of the picks are averaged, and that average is taken as the velocity the team will achieve each iteration. For example, if 3 picks came to 6, 8 and 10 points for a 2-week iteration, then (6 + 8 + 10) / 3 = 8 points is the team’s raw velocity for 2 weeks. A schedule can then be laid out assuming the team finishes 8 points in each 2-week iteration.

Either a. or b., or both, can be done before planning velocity for a release. However, these only provide an indicator of the team’s velocity. The actual velocity will stabilize only over time, as the team learns more about the domain and the technology of the project.
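The arithmetic of the raw velocity exercise in option b. can be sketched as follows. The picks of 6, 8 and 10 points and the 2-week iteration come from the example above; the release scope of 120 points and the function names are assumptions added purely for illustration.

```python
import math


def raw_velocity(pick_totals):
    """Average the point totals of the sample picks."""
    return sum(pick_totals) / len(pick_totals)


def iterations_needed(scope_points, velocity):
    """Round up: a partly filled iteration still occupies a full slot in the schedule."""
    return math.ceil(scope_points / velocity)


picks = [6, 8, 10]       # totals of the 3 picks from the example
release_scope = 120      # hypothetical release size in points
iteration_weeks = 2

v = raw_velocity(picks)
n = iterations_needed(release_scope, v)

print(v)                    # 8.0
print(n)                    # 15
print(n * iteration_weeks)  # 30 weeks
```

A schedule derived this way is only as good as the picks behind it, so it should be revisited once real iteration data is available.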

Should we also measure velocity in parts, such as developer velocity, QA velocity, etc.?

Velocity should always be measured against the definition of DONE for a story. Measuring velocity in parts amounts to saying that a story is partially done, which has no meaning in itself. In an ideal world a story is done when it is in production, but for velocity accounting one should at least count stories only when they have been tested, showcased and accepted by the customer in a production-like environment.

Should we plan the same velocity even if the team is ramping up with new people?

During the release planning exercise, one should also arrive at a rough staffing plan. This should show the addition of people (Developers, BAs, QAs) staggered across the iterations of the project. Based on this plan, a ramp-up velocity should be planned, because new people joining the team face a learning curve before they contribute as effectively as the existing team members.

As an example, if 1 pair of developers was doing 5 points in an iteration, adding a new pair of developers to the team will not immediately double the velocity to 10 points, as the new developers will be learning about the project in the first few iterations. So a staggered velocity of 5, 7 and 10 points for the next 3 iterations may be more realistic.
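One way to write down such a staggered plan is sketched below. The ramp factors of 0.0, 0.4 and 1.0 are assumptions chosen only so that the output matches the 5, 7, 10 example; they are not a rule, and a real team would pick its own factors from experience.

```python
def ramp_up_velocity(existing_velocity, new_pair_full_velocity, ramp_factors):
    """Planned velocity per iteration while a new pair ramps up.

    ramp_factors[i] is the assumed fraction of its eventual velocity
    that the new pair contributes in iteration i.
    """
    return [
        round(existing_velocity + new_pair_full_velocity * factor)
        for factor in ramp_factors
    ]


# Existing pair does 5 points; the new pair is assumed to reach 5 points eventually.
plan = ramp_up_velocity(5, 5, [0.0, 0.4, 1.0])  # factors are illustrative assumptions
print(plan)  # [5, 7, 10]
```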

What should be done if the team does not achieve the planned velocity for the iteration?

Before making any decision to change the planned velocity, one needs to understand the root cause of the problem. If the team set out to achieve 10 points in an iteration and could do only 6, what was the actual reason for not completing the other 4 points? Was it poorly analysed stories, technical debt in a certain area, the use of a new technology or framework, or something else? If the root cause can be fixed in the subsequent iteration, for example by adding an experienced business analyst to work closely with the customer and provide well analysed stories, the team can still set out to achieve the planned velocity in the next iteration.

However, if the team is consistently able to achieve only 6 points on average over the last 3 iterations, even after exploring and eliminating all possible root causes, then it is fair to say that the average velocity of the team is 6 points. In this scenario, the first thing to do is to let the customers know the team’s current velocity and help them understand the reasons behind it. The next thing is to look at project variables such as Scope, Time and Cost along with the Trade-off Sliders, and see which of the variables can be compromised without conflicting with the interests of project stakeholders. An example could be reducing the scope of the release by dropping a few nice-to-have stories, if the trade-off sliders indicate that scope is a lesser priority than being on time and within budget.

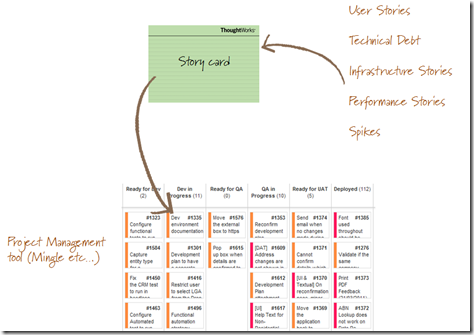
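The averaging over the last 3 iterations, and its effect on scope, can be sketched as follows. The velocity history, the remaining scope of 30 points and the 4 remaining iterations are hypothetical numbers used only for illustration; the 3-iteration window comes from the text above.

```python
def average_velocity(history, window=3):
    """Average velocity over the most recent `window` iterations."""
    recent = history[-window:]
    return sum(recent) / len(recent)


def scope_shortfall(remaining_points, iterations_left, velocity):
    """Points that will not fit if time and cost stay fixed (never negative)."""
    capacity = velocity * iterations_left
    return max(0, remaining_points - capacity)


history = [10, 6, 5, 7]   # hypothetical velocities; the last three average 6
avg = average_velocity(history)
shortfall = scope_shortfall(remaining_points=30, iterations_left=4, velocity=avg)

print(avg)        # 6.0
print(shortfall)  # 6.0
```

With time and cost held fixed, the shortfall is roughly the number of points of nice-to-have stories that would need to be dropped. If the sliders favour scope instead, the same numbers can be used to argue for more time or more people.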

Do technical/infrastructure stories and spikes count toward velocity in an iteration?

Especially at the start of a new project, there will be activities to set up basic infrastructure, such as build scripts, continuous integration and environments. There will also be spike stories which need to be played to understand other frameworks and systems the team needs to work with. Since the team spends effort on these stories and tasks, they should be accounted for in the velocity of an iteration. Hence any technical story or spike should be estimated like all the other stories in the release and planned in the same manner.

The team also fixes defects in an iteration. How do we account for them in velocity?

Defects in an iteration broadly fall into 2 categories.

The first category is story defects: defects on stories which have not yet been signed off by the QA team during the iteration. In this case, once the defects are fixed, the story gets signed off by the QAs, and the story points associated with the story count toward the velocity.

The second category is regression defects: the application has regressed over a period of time, and the defects belong to stories which the team completed in earlier iterations. This can happen because a safety net, such as a unit or acceptance test, was missing when the story was completed. The team will have to spend effort fixing these defects, but they do not count toward velocity separately. If a team was doing 10 points in an iteration, having 4 such defects might let the team do only 8 points in that iteration. Hence the drop in velocity is a clear indicator that regression defects are slowing down the team’s progress. Addressing the technical debt of test coverage in that area will help minimise such defects.